Databricks

Overview

Ternary's Databricks integration ingests usage and cost tables from your Databricks workspaces into the Reporting Engine. Once connected, you can analyze Databricks consumption alongside your existing multi-cloud integrations and cloud bills for a unified view of platform spend and usage patterns.

Supported Data Source

- Databricks Usage Tables (e.g., `system.billing.usage`) - Ternary reads the native usage tables exposed by Databricks to capture compute, storage, and other resource consumption.

Data is ingested every four hours; you can trigger an update manually from the integration settings.

Prerequisites

- Databricks Workspace Access
  - You'll need a service principal in Databricks that has read-only access to the usage tables.
  - Gather the following identifiers from your Databricks admin console:
    - Account ID - GUID for your Databricks account.
    - Workspace URL/Host - full URL of each workspace (e.g., `https://adb-1234567890123456.17.azuredatabricks.net`).
    - Warehouse ID - identifier of the warehouse used for queries.
    - Service Principal ID - the numeric ID of the service principal (used when creating the OAuth federation policy).
    - Service Principal UUID / Application (Client) ID - the GUID of the service principal (entered in the Ternary integration wizard).

- Permissions
  - Assign read privileges on the usage tables (`system.billing.usage` and related system tables) to the service principal.
  - Ensure the service principal can query the chosen warehouse(s).
  - Configure an OAuth federation policy (see the OAuth Federation section below).


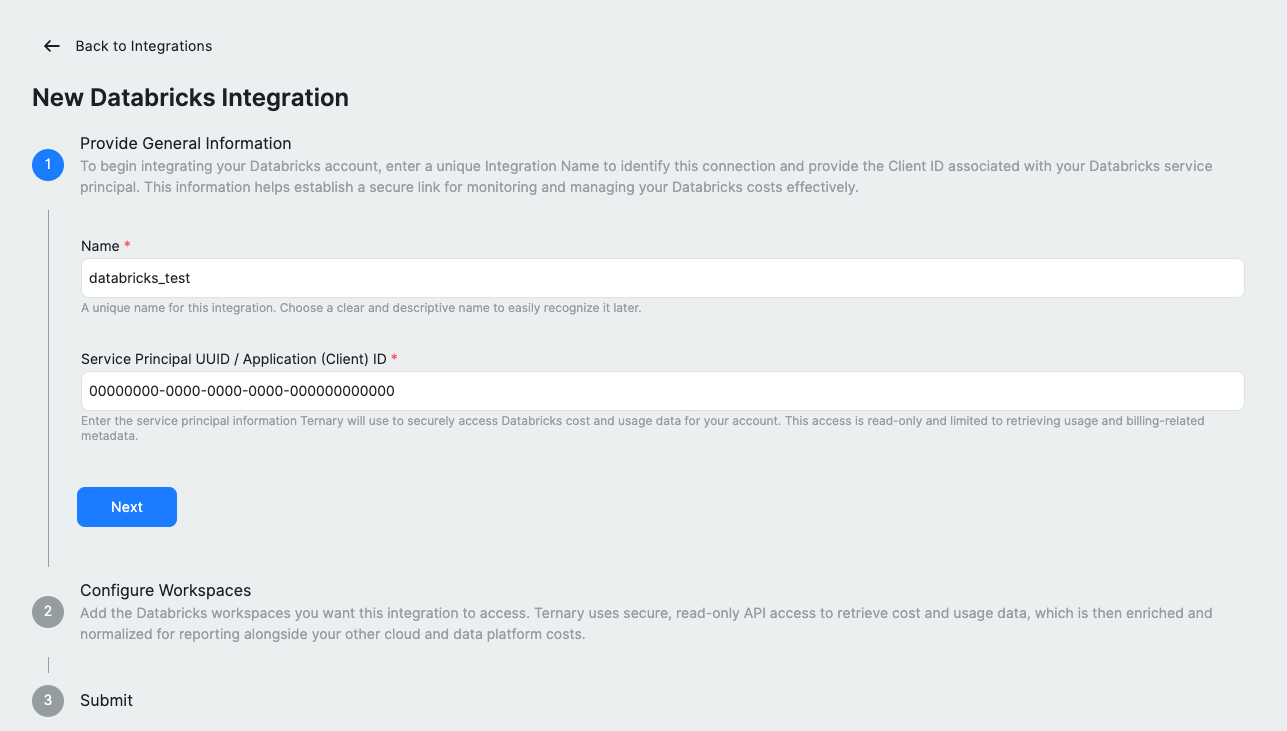

Naming Constraints

- Integration names cannot contain spaces. Use underscores or hyphens (e.g., `databricks_test`).

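Ternary enforces this rule in the integration wizard. If you generate integration names from a script, a minimal local pre-check might look like the sketch below. The function name `is_valid_integration_name` is hypothetical, and it mirrors only the no-spaces rule stated above, not any other validation Ternary may apply.

```shell
# Hypothetical local pre-check mirroring Ternary's "no spaces" naming rule.
# Returns 0 (valid) for a non-empty name without whitespace, 1 otherwise.
is_valid_integration_name() {
  # Reject empty names.
  [ -n "$1" ] || return 1
  # Any space, tab, or newline anywhere in the name fails the check.
  case $1 in
    *[[:space:]]*) return 1 ;;
    *) return 0 ;;
  esac
}

# Example:
#   is_valid_integration_name databricks_test && echo "name ok"
```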

Setup Instructions

1. Create a Service Principal

Create a dedicated service principal for Ternary in your Databricks account. Note the principal's numeric ID and its Application (Client) ID / UUID for use in Ternary. (For detailed steps on creating a Databricks service principal, see the Databricks documentation.)

2. Grant Permissions

Grant the service principal read access to the Databricks usage tables and ensure it can query the warehouse(s) you plan to integrate. You must also create an OAuth federation policy that allows the principal to authenticate (see the OAuth Federation section below).
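If your system tables are governed by Unity Catalog, the read access described above can be granted with SQL `GRANT` statements. The sketch below is a hypothetical helper, `print_billing_grants`, that prints those statements for a given Application (Client) ID so you can run them in a Databricks SQL editor as a metastore admin (or another user allowed to grant on the `system` catalog). It covers only `system.billing.usage`; add grants for any related system tables your setup needs, and grant warehouse access (for example, the CAN USE permission) separately in the warehouse's permission settings.

```shell
# Hypothetical helper: prints Unity Catalog GRANT statements giving a service
# principal read access to system.billing.usage. Assumes Unity Catalog governs
# the system tables; run the output in a SQL editor as a user who can grant on
# the `system` catalog (for example, a metastore admin).
print_billing_grants() {
  # $1: the service principal's Application (Client) ID / UUID.
  if [ -z "$1" ]; then
    echo "usage: print_billing_grants <application-id>" >&2
    return 1
  fi
  # Unity Catalog refers to service principals by application ID in backticks.
  # The format strings are single-quoted, so the backticks print literally.
  printf 'GRANT USE CATALOG ON CATALOG system TO `%s`;\n' "$1"
  printf 'GRANT USE SCHEMA ON SCHEMA system.billing TO `%s`;\n' "$1"
  printf 'GRANT SELECT ON TABLE system.billing.usage TO `%s`;\n' "$1"
}
```

For example, `print_billing_grants 11111111-2222-3333-4444-555555555555` prints the three statements for that placeholder ID.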

3. Add the Integration in Ternary

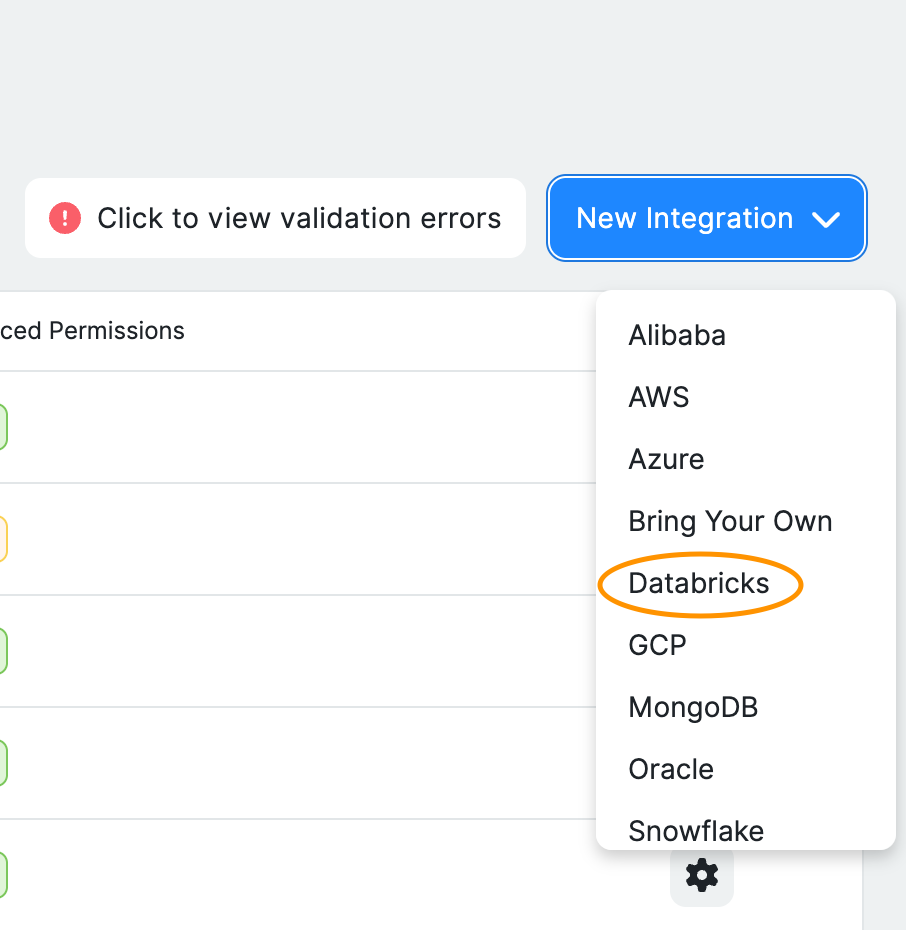

- Navigate to the Integrations page
  - Sign in to Ternary with Admin privileges and go to Admin -> Integrations.
  - Click New Integration and select Databricks.
- Provide General Information
  - Name: a unique identifier (no spaces).
  - Service Principal UUID / Application (Client) ID: the GUID of your Databricks service principal.
  - Click Next.

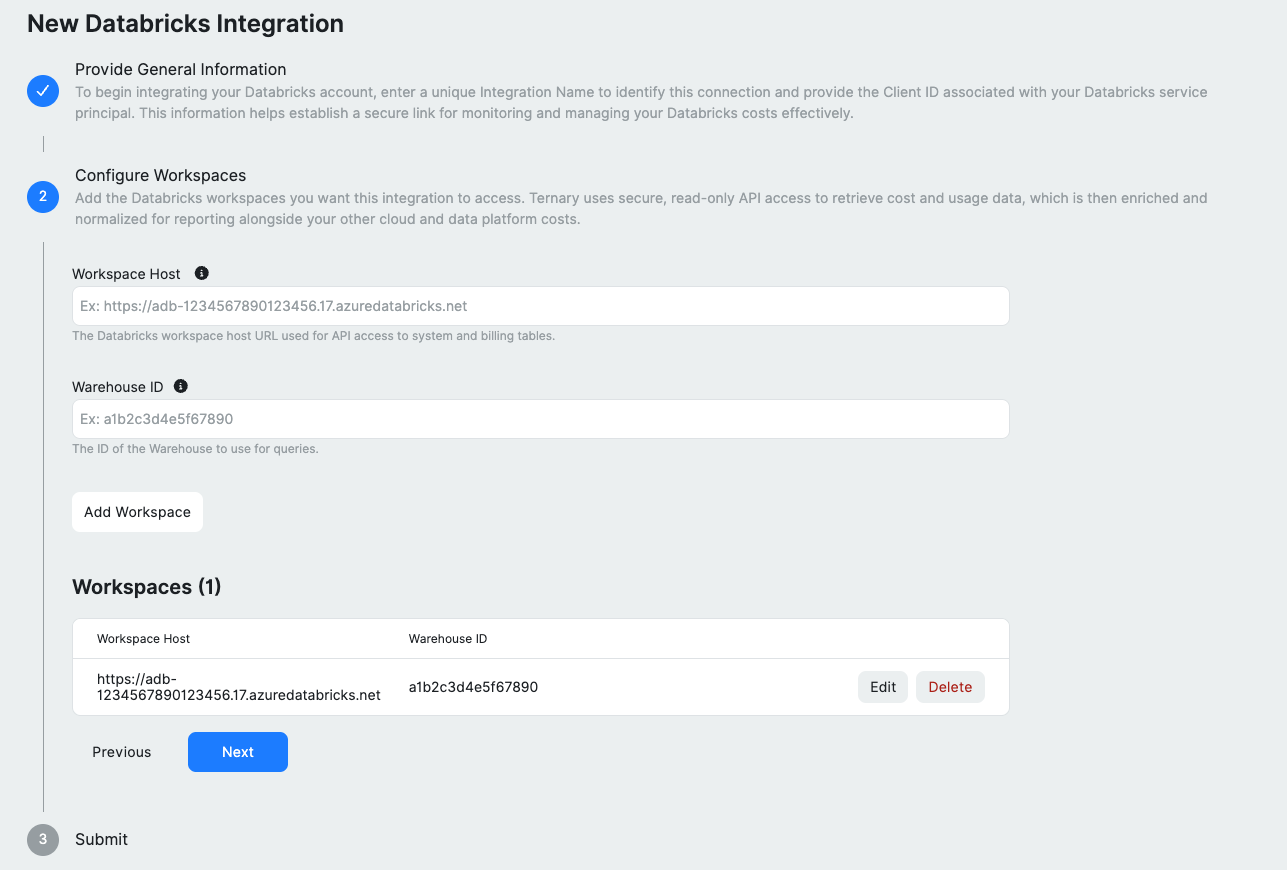

- Configure Workspaces
  - For each workspace you want to ingest:
    - Workspace Host: full workspace URL.
    - Warehouse ID: identifier of the warehouse used for queries.
    - Click Add Workspace. The workspace appears in a table where you can edit or delete entries.
  - After adding at least one workspace, click Next.

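Before clicking Add Workspace, you may want to sanity-check each entry. The hypothetical helpers below rest on two assumptions: that Ternary expects the host with its `https://` scheme (as in the Prerequisites example), and that the warehouse ID is the last segment of the warehouse's HTTP path, which Databricks shows in the SQL warehouse's connection details in the form `/sql/1.0/warehouses/<id>`.

```shell
# Hypothetical helpers for checking workspace entries before adding them.

# Succeeds if the host is a full https:// URL with something after the scheme,
# e.g. https://adb-1234567890123456.17.azuredatabricks.net
is_full_workspace_url() {
  case $1 in
    https://?*) return 0 ;;
    *) return 1 ;;
  esac
}

# Prints the warehouse ID from an HTTP path like /sql/1.0/warehouses/abcdef1234567890.
# Fails if the path has a different prefix, an empty ID, or extra path segments.
warehouse_id_from_http_path() {
  case $1 in
    /sql/1.0/warehouses/*) ;;
    *) return 1 ;;
  esac
  # Strip the fixed prefix; whatever remains should be a single ID segment.
  id=${1#/sql/1.0/warehouses/}
  case $id in
    '' | */*) return 1 ;;
  esac
  printf '%s\n' "$id"
}
```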

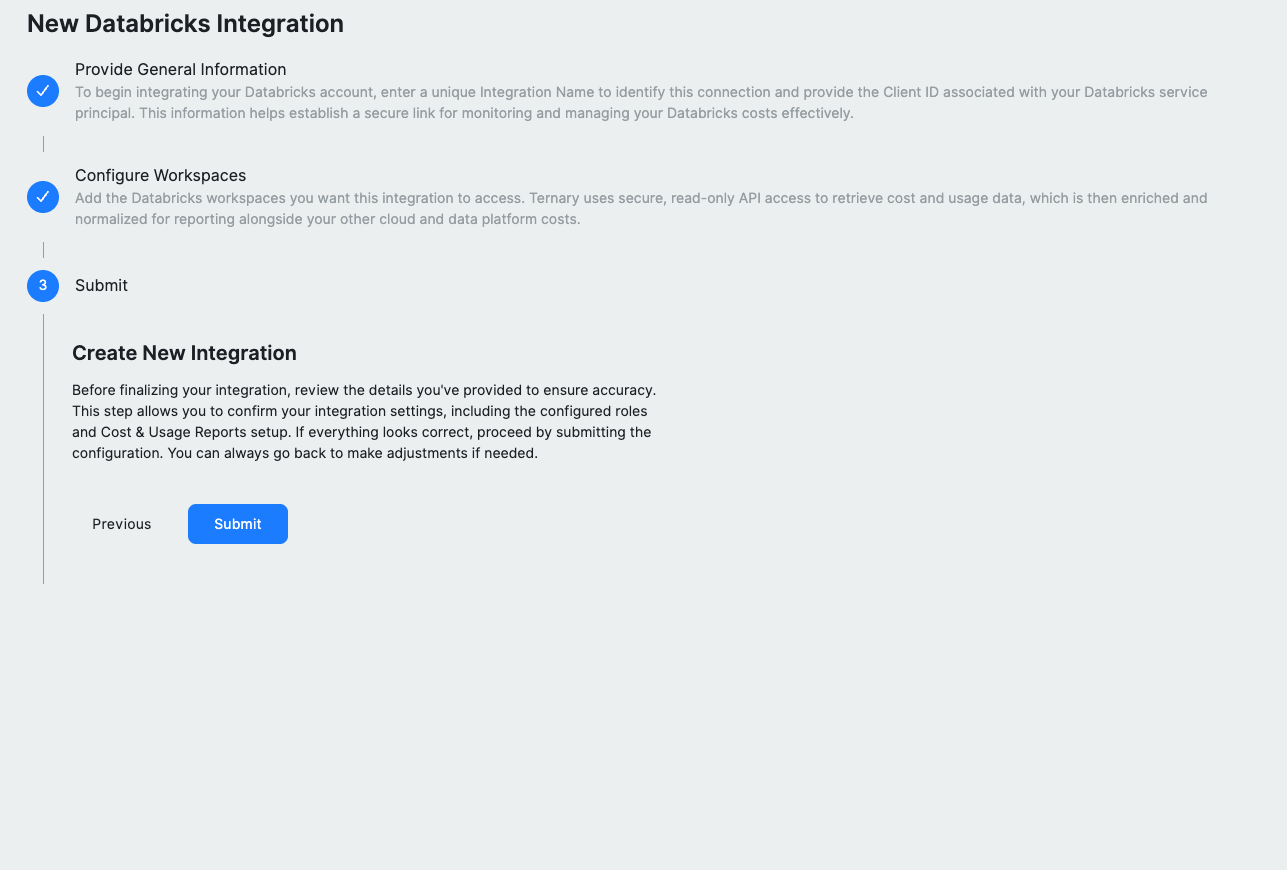

- Submit
  - Review your configuration summary.
  - Click Submit to initiate ingestion. Ternary will begin pulling data immediately and then every four hours thereafter.

Using Databricks Data in Reports

Once ingestion completes, your Databricks tables show up as a new data source option in the Reporting Engine. You can:

- Group by clusters, resource types, tags or any other available columns.

- Track Databricks spending alongside other cloud costs in dashboards and reports.

To trigger a manual update, go to the integration's settings (gear icon) and click Request Billing Update.

Troubleshooting & Common Issues

| Issue | Potential Cause | Resolution |
|---|---|---|
| Authentication error | Service principal ID or token is incorrect/expired | Verify your Databricks service principal credentials and update them in the integration settings. |
| Missing date columns | Usage table lacks a start date/time field | Confirm that your Databricks usage table exposes a column like usageStartDate; at least one date column is required. |
| Federation policy error | OAuth federation policy not configured | See the OAuth Federation section below to configure the required federation policy. |

Important: Service Principal Scope

The service principal must be created at the Databricks account level, not just within a single workspace. Ensure it has access to every workspace and SQL warehouse you intend to ingest. Workspace-level service principals alone are insufficient.

Warehouse Requirements

- The SQL warehouse used for ingestion must support querying the `system.billing.*` tables.
- Auto-resume should be enabled so the warehouse starts when Ternary issues queries; ingestion will pause if the warehouse is stopped.
- Use a Serverless or Pro warehouse, sized appropriately for your workload.

Billing Table Availability

Databricks system billing tables (e.g., system.billing.usage) are available only on supported plans and typically retain a limited history. Ternary can only ingest data that exists in these tables at the time of ingestion; historical backfill beyond Databricks' retention period is not possible.

OAuth Federation

OAuth federation is required for this integration; personal access tokens are not currently supported. You must configure a federation policy in Databricks to allow the Ternary service principal to authenticate.

Platform-specific documentation:

- Databricks on GCP: Configure a federation policy (GCP)

- Databricks on Azure: Configure a federation policy (Azure)

Using the Databricks CLI:

You can create the federation policy using the Databricks CLI:

```shell
# Replace SERVICE_PRINCIPAL_ID and the <YOUR_TERNARY_SERVICE_ACCOUNT_EMAIL> placeholder before running.
databricks account service-principal-federation-policy create SERVICE_PRINCIPAL_ID --json '{
  "oidc_policy": {
    "issuer": "https://accounts.google.com",
    "audiences": ["databricks"],
    "subject": "<YOUR_TERNARY_SERVICE_ACCOUNT_EMAIL>",
    "subject_claim": "email"
  }
}'
```

Important: Replace `SERVICE_PRINCIPAL_ID` with your service principal's numeric ID (not the UUID). You can find this in the Databricks admin console under Service Principals.

The `subject` should be the service account email provided by Ternary during integration setup. This email is displayed in the Ternary integration wizard.
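Because the CLI command takes the numeric ID while the Ternary wizard takes the UUID, it is easy to paste the wrong value. The sketch below uses two hypothetical helpers: `classify_sp_id` reports whether a value looks like a numeric ID or a UUID, and `federation_policy_json` builds the same policy body as the command above for a given service account email. It performs no JSON escaping, so it assumes a plain email address.

```shell
# Hypothetical pre-flight helpers for the federation policy command.

# Prints "numeric", "uuid", or "unknown" for a service principal identifier.
classify_sp_id() {
  case $1 in
    '' | *[!0-9]*) ;;                 # empty or has a non-digit: not numeric
    *) echo numeric; return 0 ;;
  esac
  # UUIDs are 8-4-4-4-12 groups of hexadecimal digits.
  if printf '%s\n' "$1" | grep -Eq '^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'; then
    echo uuid
  else
    echo unknown
  fi
}

# Prints the federation policy JSON for the given service account email.
# No JSON escaping is done; the argument must be a plain email address.
federation_policy_json() {
  case $1 in
    ?*@?*) ;;
    *) echo "expected a service account email" >&2; return 1 ;;
  esac
  printf '{"oidc_policy": {"issuer": "https://accounts.google.com", "audiences": ["databricks"], "subject": "%s", "subject_claim": "email"}}\n' "$1"
}

# Example (hypothetical values):
#   sp_id=1234567890
#   [ "$(classify_sp_id "$sp_id")" = numeric ] &&
#     databricks account service-principal-federation-policy create "$sp_id" \
#       --json "$(federation_policy_json "$TERNARY_SA_EMAIL")"
```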

If validation fails with a federation policy error, check the error message for the expected policy configuration. Ternary validation will display the required issuer, subject, and audience values that your federation policy must include.

After Submission

After clicking Submit, initial ingestion may take several hours. You can monitor the ingestion status in the Ternary Admin -> Integrations -> Databricks screen. Data will begin appearing in the Reporting Engine once the first ingestion completes successfully. You can trigger an immediate update by clicking Request Billing Update in the integration settings.

Deleting the Integration

Deleting the Databricks integration removes the stored credentials and stops further ingestion. Historical Databricks data is also removed from all Ternary feature pages, including reports, dashboards, budgets, and forecasts.

FAQ

- What permissions are needed in Databricks? Assign read-only access on the usage tables to the service principal. Depending on your Databricks deployment, this may correspond to a built-in billing reader role or a custom policy with `SELECT` privileges.

- How often is data refreshed? Every four hours by default. You can manually trigger an update via Request Billing Update in the integration settings.

- Can I delete the integration? Yes. Go to Admin -> Integrations, find the Databricks integration and remove it. This stops ingestion and removes all Databricks data from Ternary.

Updated 11 days ago